Now we'll make game objects react to manual input (the mouse, for instance). The main task is to determine which game object the mouse is pointing at. We'll use the same approach as in the previous chapter. Unlike collision detection, though, we'll calculate objects' sizes and positions not in the world X-Z plane but in the screen X-Y plane.

Source code: https://github.com/bkantemir/_wg_411

* Just in case, executable demo (Windows) here. Windows Defender will complain, but that's to be expected, since this is not an approved source of executable files.

Now, back to the topic: start Visual Studio and open the CPP/a996rr/pw/pw.sln project.

Case 1: 3D objects on the screen

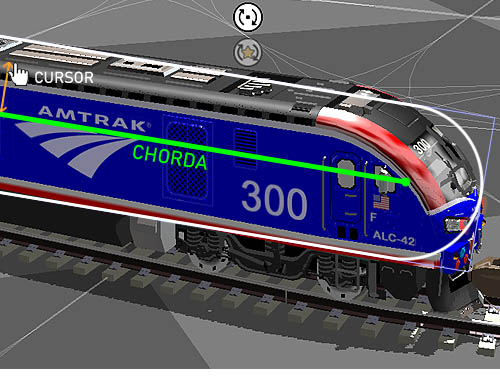

Let's say we want to grab and drag the locomotive here:

How is the program supposed to detect whether the mouse is on the locomotive or not?

Well, the first thing that comes to mind is to check bounding box:

Obviously, being inside the bounding box does not automatically mean hitting the object (as shown above).

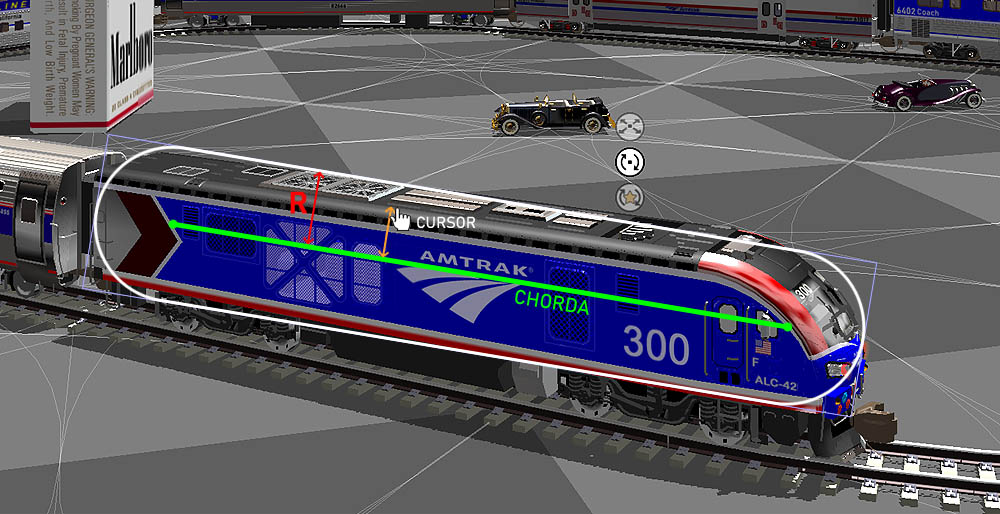

Hitting the projection of the original (source) bounding box - YES, that would work:

However, checking such an arbitrarily oriented rectangle is a rather cumbersome task. Instead, I calculate the projection of the longest axis (I call it the "CHORDA") and a radius R equal to half of the shorter axis:

So now the task comes down to calculating the distance from the cursor to the chorda. If it is less than the chorda's R, we are in.
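The chorda test can be sketched as a point-to-segment distance check in screen coordinates. This is only a minimal illustration, not the project's actual code: the Vec2 type and the function names distanceToSegment() and cursorHitsChorda() are hypothetical, and the chorda is assumed to be given by its two screen-space end points.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical 2D screen-space point (not a type from the project).
struct Vec2 { float x, y; };

// Distance from point p to the segment a-b (the "chorda").
float distanceToSegment(Vec2 p, Vec2 a, Vec2 b) {
    float abx = b.x - a.x, aby = b.y - a.y;
    float lenSq = abx * abx + aby * aby;
    float t = 0.0f; // position of the closest point along a-b, 0..1
    if (lenSq > 0.0f) {
        // Project p onto the line a-b, then clamp to the segment.
        t = ((p.x - a.x) * abx + (p.y - a.y) * aby) / lenSq;
        t = std::max(0.0f, std::min(1.0f, t));
    }
    float dx = p.x - (a.x + t * abx);
    float dy = p.y - (a.y + t * aby);
    return std::sqrt(dx * dx + dy * dy);
}

// True if the cursor is closer to the chorda than its radius R.
bool cursorHitsChorda(Vec2 cursor, Vec2 a, Vec2 b, float radius) {
    assert(radius >= 0.0f);
    return distanceToSegment(cursor, a, b) < radius;
}
```

Note that with this formulation the hit area is a "capsule" (a rectangle with rounded ends) around the chorda, which is a reasonable approximation of the projected bounding box.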

Implementation:

- At the very beginning, in main.cpp, we call CPP/p_windows/input.cpp -> initMouseInput() to set up mouse input callbacks and to load several different cursors. This function is Windows-specific; Android will require a different implementation. These callbacks record mouse events into our own TouchScreen::touchScreenEvents array for subsequent processing.

- Original (source) bounding boxes: when loading models in ModelLoader::loadModelStandard(), we call the buildGabaritesFromDrawJobs() function, which fills the gabaritesOnLoad structure in the SceneSubj object.

- During the game, in each frame we need to calculate the screen projections of the models' bounding boxes: in TheApp::drawFrame(), when scanning our SceneSubjs, I call Gabarites::fillGabarites() (line 290) to fill out the SceneSubj's gabaritesOnScreen structure for subsequent use.

- In addition, we call ui/TouchScreen::getInput() (line 217), which checks the cursor position, finds the matching SceneSubj (if any), and decides which action to call.

Case 2: buttons (2D)

Even easier. Since buttons are 2D, we don't even need to pre-calculate "chordas" or bounding boxes. If the distance from the cursor to the button's center is less than the button radius, it's a hit. The structure is much simpler than SceneSubj, so buttons are organized in a separate class, UISubj. Still, there is a set of interactivity-related functions shared with SceneSubjs, such as:

- isClickable()

- isDraggable()

- isResponsive()

- onFocus()

- onFocusOut()

- onLeftButtonDown()

- onLeftButtonUp()

- onDrag()

- onClick()

- onDoubleClick()

- onLongClick()

To make both 2D UISubjs and 3D SceneSubjs equally accessible in the TouchScreen class, this functionality is declared separately in a new ScreenSubj class, the parent of both the UISubj and SceneSubj classes.
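The idea of a common parent can be illustrated roughly as follows. This is a stripped-down sketch under assumptions, not the project's real declarations: only a few of the functions listed above are shown, their signatures are guessed, and isHit(), clickCount and dispatchClick() are hypothetical helpers added just for the demonstration.

```cpp
#include <cassert>

// Simplified stand-in for the shared parent class.
// Signatures are assumptions; the real class declares more callbacks.
class ScreenSubj {
public:
    virtual ~ScreenSubj() = default;
    virtual bool isClickable() const { return false; }
    virtual bool isDraggable() const { return false; }
    // Hypothetical helper: is the cursor over this subject?
    virtual bool isHit(float cursorX, float cursorY) const = 0;
    virtual void onClick() {}
};

// A 2D button: the hit test is a plain circle check.
class UISubj : public ScreenSubj {
public:
    UISubj(float x, float y, float r) : centerX(x), centerY(y), radius(r) {
        assert(r > 0.0f);
    }
    bool isClickable() const override { return true; }
    bool isHit(float cursorX, float cursorY) const override {
        float dx = cursorX - centerX, dy = cursorY - centerY;
        // Compare squared distances to avoid a square root.
        return dx * dx + dy * dy < radius * radius;
    }
    void onClick() override { ++clickCount; }
    int clickCount = 0; // for demonstration only
private:
    float centerX, centerY, radius;
};

// The input code only needs the parent interface, so the same call
// would work for a 3D SceneSubj-like class with its own isHit().
bool dispatchClick(ScreenSubj& subj, float cursorX, float cursorY) {
    if (!subj.isClickable() || !subj.isHit(cursorX, cursorY)) return false;
    subj.onClick();
    return true;
}
```

A 3D subject would override isHit() with the chorda test instead of the circle test, while the dispatching code stays the same.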

Case 3: dragging background (a game table)

The challenge here is to find the 3D point on the table that corresponds to the cursor's 2D screen position. The solution is to build a 3D line (ray) from the camera to the table, passing through the cursor's screen position. This is implemented in TheTable::getCursorAncorPointTable().

Key steps:

- Calculate the cursor's screen position in OpenGL terms (in the -1 to 1 range) for x and y, and use +1 for z (the most distant point).

- Calculate the inverse of the mainCamera's View-Projection matrix.

- Apply this inverse matrix to the GL-formatted cursor position. The resulting vector gives the world coordinates of the most distant point of the ray from the camera through the cursor.

- Having the camera's coordinates and the ray's most distant point, calculate the 3D line equation.

- Find the ray's intersection point with the table's surface. Bingo!
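The steps above can be sketched as follows. This is a simplified illustration, not the code of TheTable::getCursorAncorPointTable(). All names here (Vec3, Mat4, toNdc(), unproject(), intersectTable()) are hypothetical. It assumes that pixel coordinates start at the top-left corner, that the inverse View-Projection matrix is already computed (by a math library, for example) and stored column-major as in OpenGL, and that the table surface is a horizontal plane y = tableY.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
using Mat4 = std::array<float, 16>; // column-major, as in OpenGL

// Step 1: pixel position -> OpenGL range (-1..1), z = +1 (far plane).
// Assumes the pixel origin is the top-left corner, with y pointing down.
Vec3 toNdc(float px, float py, float width, float height) {
    assert(width > 0.0f && height > 0.0f);
    return { 2.0f * px / width - 1.0f, 1.0f - 2.0f * py / height, 1.0f };
}

// Step 3: apply the inverse View-Projection matrix to (ndc, 1)
// and divide by w to get world coordinates.
Vec3 unproject(const Mat4& invViewProj, Vec3 ndc) {
    const float in[4] = { ndc.x, ndc.y, ndc.z, 1.0f };
    float out[4] = { 0.0f, 0.0f, 0.0f, 0.0f };
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            out[row] += invViewProj[col * 4 + row] * in[col];
    assert(out[3] != 0.0f);
    return { out[0] / out[3], out[1] / out[3], out[2] / out[3] };
}

// Steps 4-5: line through the camera and the far point, intersected
// with the plane y = tableY. Returns false if there is no intersection.
bool intersectTable(Vec3 camera, Vec3 farPoint, float tableY, Vec3& hit) {
    float dy = farPoint.y - camera.y;
    if (std::fabs(dy) < 1e-6f) return false; // ray parallel to the table
    float t = (tableY - camera.y) / dy;      // line parameter: 0 = camera, 1 = far point
    if (t < 0.0f) return false;              // table is behind the camera
    hit = { camera.x + t * (farPoint.x - camera.x),
            tableY,
            camera.z + t * (farPoint.z - camera.z) };
    return true;
}
```

Step 2 (the matrix inversion itself) is left out on purpose, since most math libraries provide it. The sketch also accepts intersection points beyond the far plane (t > 1), which treats the ray as an infinite line, as in the description above.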

Android

Start Android Studio and open the CPP/a996rr/pa project.

The difference from the Windows solution is how we read the input. Instead of mouse events, we use touch events. We don't even need special listeners here: CPP/p_android/platform.cpp -> myPollEvents() receives all the related events directly. We then convert them into our TouchScreen::touchScreenEvents array; the rest is handled by the shared code described above.

One issue that deserves special attention:

Of course, this solution relies heavily on touch events such as AMOTION_EVENT_ACTION_DOWN and AMOTION_EVENT_ACTION_UP (finger touching and leaving the screen). I don't know who to blame, Android, GameActivity, Samsung (in my case), or whoever makes screens for them, but these key events are often missed. So I added extra code to track this: CPP/p_android/platform.cpp -> myPollEvents(), from line 178.

The idea is:

If we receive touch-screen events while assuming that there are no fingers on the screen, it means that we (the device) missed AMOTION_EVENT_ACTION_DOWN.

And vice versa: if we assume that there IS a finger on the screen, but no events have arrived for a while, then AMOTION_EVENT_ACTION_UP was probably missed.
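A rough sketch of this idea is shown below. It is not the code from myPollEvents(): the TouchTracker structure, its member names, and the 200 ms timeout are all assumptions made up for illustration, and the real code works with Android motion events rather than plain flags.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical tracker for missed DOWN/UP events.
struct TouchTracker {
    bool fingerDown = false;       // our current belief about the screen
    std::int64_t lastEventMs = 0;  // time of the last touch event
    std::int64_t timeoutMs = 200;  // assumed "no events for a while" limit

    // Call for every DOWN or MOVE event.
    // Returns true if a missed DOWN had to be assumed.
    bool onTouchEvent(bool isDownEvent, std::int64_t nowMs) {
        // Events are arriving, but we believed there was no finger:
        // the DOWN event was overlooked.
        bool missedDown = !fingerDown && !isDownEvent;
        fingerDown = true;
        lastEventMs = nowMs;
        return missedDown;
    }

    // Call when a real UP event arrives.
    void onUpEvent() { fingerDown = false; }

    // Call once per frame.
    // Returns true if a missed UP had to be assumed.
    bool onFrame(std::int64_t nowMs) {
        assert(nowMs >= lastEventMs);
        // We believe a finger is down, but nothing has arrived lately:
        // the UP event was probably overlooked.
        if (fingerDown && nowMs - lastEventMs > timeoutMs) {
            fingerDown = false;
            return true;
        }
        return false;
    }
};
```

The timeout is a trade-off: if it is too short, a finger resting still on the screen could be mistaken for a release; if it is too long, a missed release is noticed late.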

- In newer versions of Android Studio this problem is solved by a new function: android_input_buffer* inputBuffer = android_app_swap_input_buffers(pAndroidApp);